Coaching for performance means shifting from guesswork to guidance. It uses objective data to understand how work gets done, moving conversations away from subjective feedback and toward solving concrete problems based on real workflow patterns.

This approach helps you pinpoint the actual hurdles your team faces, like friction between tools or constant context switching, instead of just speculating about skill gaps.

Moving Beyond Gut Instincts in Team Coaching

Traditional coaching often relies on observation and what people self-report. This approach is subjective and frequently misses the root cause of a performance issue. A manager might see a project lagging and assume the team needs more training when the real problem is something else entirely.

A data-driven strategy cuts through the guesswork. It uses objective metrics to uncover the genuine, often invisible, obstacles that hold teams back.

Identify the Real Bottlenecks

Picture this: a DevOps manager notices their team’s deployment frequency is slowing down. The gut reaction is to assume a technical skill gap. But what if it's not?

Application usage data from a tool like WhatPulse might reveal something different. It could show the team is losing hours every day toggling between three clunky legacy applications to get a single deployment out the door.

This one insight reframes the coaching conversation. Instead of booking generic training sessions, the manager can focus on a specific, solvable process problem. The discussion becomes: "How can we reduce the friction between these tools?"

The data helps pinpoint exactly where time is being spent, showing over- or under-used software and revealing hidden workflow inefficiencies. You can dig deeper into this approach by exploring data-driven decision making.

The Impact of Focused Coaching

When your coaching is grounded in evidence, it becomes more effective and builds trust. Your team sees the goal isn't to micromanage but to remove the barriers that frustrate them and slow down their work.

This shift has a clear business impact. In the Netherlands, for instance, coaching for performance has become a fixture of organisational development. FMO, the Dutch development bank, saw perceptions of learning opportunities jump 12.3% after launching new coaching initiatives in 2023. Receptiveness to employee ideas also rose by 13.8%, which suggests that data-informed coaching helps managers address real areas for improvement. You can learn more about FMO’s organisational developments.

The objective isn't surveillance; it's clarity. When you can see how work actually happens, you can have honest conversations about making it better. The data just starts the conversation.

This approach gives you a clear path forward, turning vague performance goals into actionable improvement projects that help both the team and the business.

Defining What Good Performance Looks Like

Before you can coach for better performance, you have to agree on what "better" means. This simple concept often gets lost in vague goals like “be more productive” or “improve efficiency.” These phrases sound good in a meeting but are practically useless on the ground. They give your team no clear target to aim for and no way to know if they’re getting closer.

Effective coaching for performance must start with something you can measure, tied directly to a real business outcome. It is about moving from fuzzy ideas to concrete metrics that everyone on the team understands and buys into.

From Ambiguity to Actionable Metrics

For an engineering team, a good goal isn’t just “ship code faster.” It’s more specific, like “reduce build verification time by 15%” or “decrease time spent in Jira ticket administration by three hours per week.” Suddenly, you have tangible targets.

A finance team might aim to “decrease time spent on manual Excel data entry by 20%.” A marketing team could target “reducing the time to create campaign reports by 25%” by getting a handle on their automation tools. Each of these goals is specific, measurable, and tackles a known point of friction in their day-to-day work.

The real objective is to identify a bottleneck that, if removed, would make a noticeable difference in the team's output and daily work life. This is where baseline analytics become essential.

To set goals that are both ambitious and realistic, you first need a clear picture of where you’re starting from.

Establishing a Realistic Baseline

You can’t know what “better” looks like until you know what “now” looks like. Start by collecting baseline data on how your team actually works today. By instrumenting endpoints with a privacy-first tool, you can gather real-world insights into application usage and workflow patterns without slipping into surveillance.

This data gives you a factual starting point. For instance, you might discover your customer support team spends an average of 10 hours a week per person just toggling between your CRM and a separate knowledge base. That's a measurable problem. Your baseline is 10 hours, and a solid goal could be to slash that to five hours by integrating the two systems.

This data-backed approach changes the coaching conversation. Instead of a manager saying, “I feel like we’re wasting time,” the conversation becomes, “The data shows we spend 10 hours a week on this task. How can we work together to cut that in half?”
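To make the baseline arithmetic concrete, here is a minimal sketch in Python. It assumes you already have a weekly total per anonymised team member; the list of hours and the `baseline_and_target` helper are illustrative and not part of any specific tool's export.

```python
from statistics import mean

# Hypothetical weekly totals: hours each anonymised team member spent
# toggling between the CRM and the knowledge base. Values are illustrative.
weekly_toggle_hours = [9.5, 11.0, 10.5, 9.0, 10.0]


def baseline_and_target(hours_per_person, reduction=0.5):
    """Return (baseline, target) as average hours per person per week."""
    baseline = mean(hours_per_person)
    target = baseline * (1 - reduction)
    return baseline, target


baseline, target = baseline_and_target(weekly_toggle_hours)
print(f"Baseline: {baseline:.1f} h/week, target: {target:.1f} h/week")
```

Working from the team average rather than any one person's number keeps the conversation on the process, which is the point of the exercise.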

From Vague Goals to Specific Performance Metrics

The table below shows how you can reframe common, vague goals into specific, trackable metrics that endpoint analytics can measure.

| Vague Goal | Team Type | Specific Metric to Track |
|---|---|---|
| "Improve team productivity" | Engineering | Time spent in CI/CD pipeline applications vs. IDEs. |
| "Be more efficient" | Finance | Daily usage duration of specific Excel macros versus manual entry. |
| "Speed up our processes" | Marketing | Time spent switching between analytics dashboards and social media tools. |
| "Better software adoption" | IT Support | Percentage of team actively using a newly deployed application. |

This process strips away ambiguity. It creates clear, achievable performance indicators that matter to the business because they’re grounded in the team’s real-world experience. The coaching that follows is focused, relevant, and far more likely to stick.
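As an illustration of how one of these metrics might be calculated, the sketch below computes the adoption percentage for a newly deployed application. The record layout, the application names, and the 30-minute "active" threshold are all assumptions made for the example, so adjust them to whatever your analytics tool actually exports.

```python
from collections import defaultdict

# Hypothetical aggregated records for one week:
# (anonymised member id, application name, minutes of use).
usage = [
    ("m1", "NewTicketTool", 120),
    ("m2", "NewTicketTool", 5),
    ("m3", "LegacyTool", 300),
    ("m4", "NewTicketTool", 240),
]


def adoption_rate(records, app, team_size, min_minutes=30):
    """Percentage of the team that used `app` for at least `min_minutes`."""
    minutes = defaultdict(int)
    for member, name, mins in records:
        if name == app:
            minutes[member] += mins
    active = sum(1 for total in minutes.values() if total >= min_minutes)
    return 100 * active / team_size


rate = adoption_rate(usage, "NewTicketTool", team_size=4)
print(f"Adoption: {rate:.0f}% of the team")
```

The threshold matters: someone who opened the tool for five minutes has not really adopted it, so decide on the cut-off with the team before you report the number.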

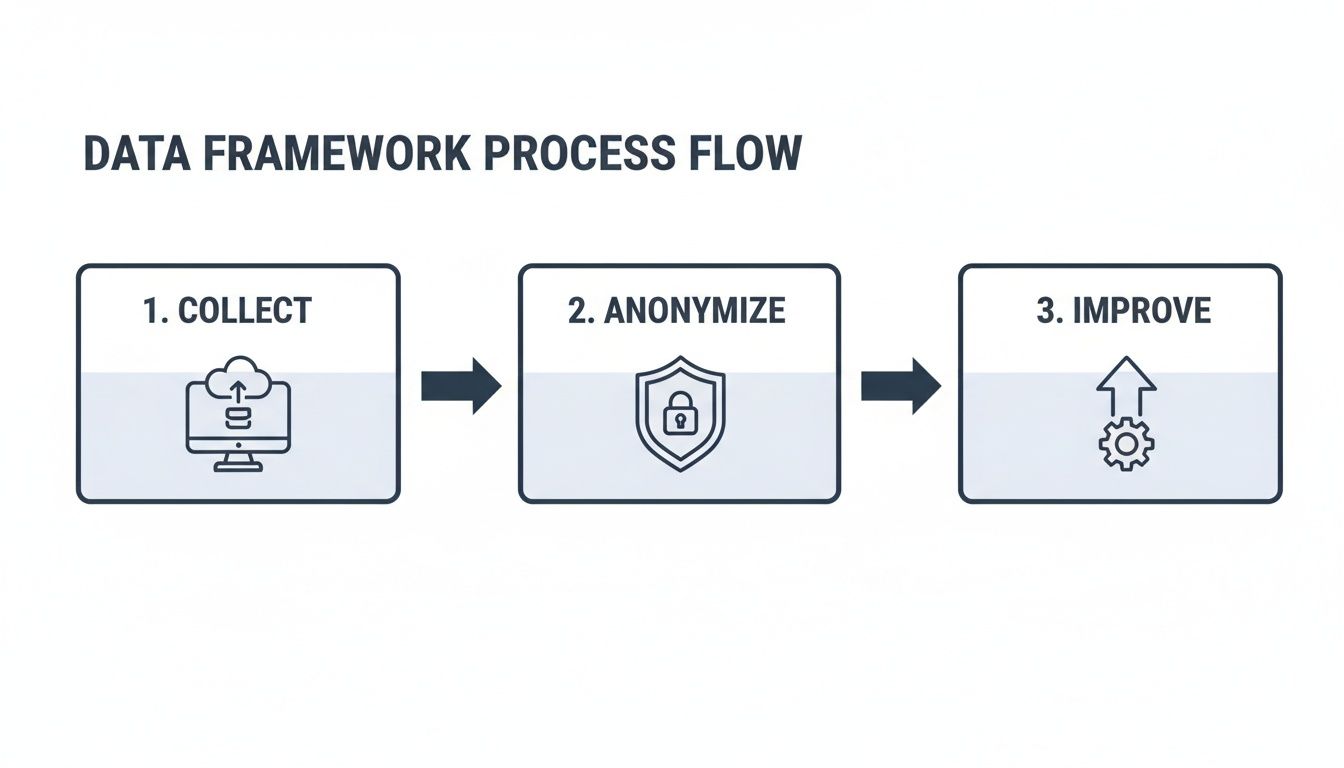

Building a Privacy-First Data Collection Framework

Good coaching needs good data. But how you get that data makes all the difference between a supportive programme and one that feels like surveillance. If you don't build on a foundation of trust from day one, the entire initiative is doomed.

A privacy-first approach is non-negotiable.

You can use a lightweight client like WhatPulse Professional to see how work gets done without ever seeing the work itself. That distinction matters. The goal isn't to spy; it's to gather anonymous, big-picture data on things like application usage, general keyboard and mouse activity, and network traffic. It's about patterns, not people.

The dashboard above shows how application usage can be visualized in an aggregated, non-invasive way. This kind of view helps you spot which tools are actually getting used, for how long, and by how many people—all without peeking over anyone's shoulder.

Securing Team Buy-In with Transparency

Before you install anything, talk to your team. Be completely transparent. They need to know what’s being tracked, what isn't, and exactly how the data will be used. Skip this step, and you've already failed.

Here are a few talking points that have worked for me in those conversations:

- What we track: We’re looking at which applications are open and for how long. We also see overall keyboard and mouse input counts to get a feel for activity levels—we don't care what you type or click on.

- What we DON'T track: We will never record your screen, log your keystrokes, or read your messages. Your individual work is, and always will be, private.

- Why we track it: This data helps us spot team-wide patterns. Are we collectively losing hours a week switching between tools? Do we have an expensive software license that nobody is even using?

- How we use it: This is about improving our processes, not about individual performance reviews. It’s about making our work lives easier, not about watching you work.

Getting the fundamentals right is key. If you're new to this, it's worth exploring a solid data collection methodology to make sure you're building a reliable plan.

Frame the conversation as a tool for support. The data can provide hard evidence to justify changes the team might already feel are needed, like finally getting a better tool or fixing a clunky workflow they’ve complained about for months.

This approach is becoming more common, especially as the coaching landscape in the Netherlands booms. A 2023 study found that Western Europe saw a 51% jump in coaches since 2019, with the Netherlands being a major hub. For businesses here, using anonymous data to coach teams on focus time and reduce context switching is a proven way to boost both retention and revenue.

By focusing on process improvement and being totally transparent, you create a system where data serves the team. You can learn more about how to optimise work patterns with data transparency and WhatPulse. That trust is the real secret to making a data-driven coaching programme work.

Running Data-Informed Coaching Conversations

Gathering the data is just the first step. The value comes when you use that data to spark better conversations—the kind built on curiosity, not accusation. Good coaching for performance isn’t a one-off meeting; it's a continuous cycle of reviewing what’s happening, solving problems together, and trying new things.

Picture this: a manager looks at a dashboard and notices a product manager on their team is spending 40% of their day bouncing between Slack, Jira, and email. The old-school approach would be a vague chat about "staying focused." The data-informed way is a world apart.

A privacy-first process is key here. Data is collected and anonymised before it's ever used in a conversation. This builds trust and keeps the focus where it should be: on the process, not the person.

Frame the Conversation Around Discovery

This isn't about pointing fingers. It's about presenting an objective observation and then asking genuinely curious questions.

You could kick things off with something like: "I was looking at the team's application usage, and an interesting pattern popped up. It looks like a lot of time is spent switching between Slack, Jira, and email. What's your take on that? Any ideas what might be behind it?"

This approach invites your team member into a joint investigation. They’re the expert on their own workflow; you just have the data that points to a possible snag.

The goal is not to have an answer. The goal is to form a shared hypothesis with your team member. The data doesn't provide solutions; it just points you toward the right questions to ask.

Maybe the root cause is unclear project briefs that force them to constantly seek clarification in Slack. Or perhaps the Jira notifications are so noisy they create endless interruptions. You'll never know until you ask.

Test Small, Reversible Experiments

Once you have a shared theory—say, "Constant pings from Jira are breaking my focus"—you can come up with a small experiment to test it out. This isn't about blowing up the entire workflow overnight. It’s about making tiny, low-risk changes.

Here are a few examples of small experiments you could try:

- Time Blocking: Block out two 90-minute "deep work" sessions each day and turn off all notifications during those windows.

- Notification Batching: Tweak Jira settings to send a summary email once an hour instead of instant alerts for every single update.

- Dedicated Channels: Create a specific Slack channel just for urgent, must-see updates, cutting down the noise in general project channels.

The idea is to try one small thing, then use the data to see if it actually helped. A week later, you can look at the dashboard again. Did the time spent context-switching go down? Did time inside the primary work app go up? The data gives you a clear answer, helping you decide whether to adopt the change or try a new experiment.
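If you want to check the "did context switching go down?" question by hand, a rough sketch looks like this. It assumes you can get an ordered list of which application had focus during an observation window; the two sequences and the two-hour window are made up for illustration.

```python
def count_switches(focus_sequence):
    """Count how often the focused application changes in an ordered sequence."""
    return sum(
        1 for prev, cur in zip(focus_sequence, focus_sequence[1:]) if prev != cur
    )


def switches_per_hour(focus_sequence, hours_observed):
    """Normalise the switch count by the length of the observation window."""
    return count_switches(focus_sequence) / hours_observed


# Hypothetical focus sequences for the same two-hour window,
# one week before and one week after the notification-batching experiment.
before = ["Jira", "Slack", "Jira", "Email", "Slack", "Jira", "Slack", "Email", "Jira"]
after = ["Jira", "Jira", "Slack", "Jira", "Jira", "Email", "Jira"]

rate_before = switches_per_hour(before, hours_observed=2)
rate_after = switches_per_hour(after, hours_observed=2)
change_pct = 100 * (rate_after - rate_before) / rate_before

print(f"Before: {rate_before:.1f}/h, after: {rate_after:.1f}/h ({change_pct:+.0f}%)")
```

A single week is a small sample, so treat the result as a signal to discuss with the team rather than a verdict on the experiment.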

This approach is particularly useful for IT and engineering leads. In the Netherlands, where the skills gap between top and low performers is widening, using endpoint data to coach people on tool adoption and solve hybrid work bottlenecks is becoming a must-have. Organisations that lean into data-driven coaching have seen engagement jump by over 20%.

When you're dealing with big datasets for these experiments, some tools can make life easier. If you’re working in spreadsheets, for instance, a practical guide to using AI in spreadsheets for data management can help automate some of the number crunching. This cycle—observe, hypothesise, experiment, measure—turns performance management from a dreaded annual review into an ongoing, collaborative process of getting better together.

Measuring the Impact of Your Coaching Programme

So, you've launched your data-driven coaching programme. How do you know if it's working? Individual improvements are great, but the real proof comes when you can connect those small wins to bigger business outcomes. The data you're collecting needs to tell a story that goes beyond one person's workflow and shows a clear return on investment.

The trick is to directly link the metrics you chose back in the goal-setting phase to tangible business results. It’s not enough to see that someone is spending less time switching between apps. You have to ask the next logical question: what did that newfound efficiency make possible for the business?

Connecting Coaching to Business Outcomes

This is where you move from tracking operational metrics to strategic ones. Instead of just looking at application usage, you start measuring how that change in usage affects team-level goals.

Here’s what that looks like in the real world:

- Engineering Team: Your coaching helped slash context switching and streamline tool usage. Did that effort translate into a 10% increase in deployment frequency over the last quarter?

- Finance Team: During your coaching sessions, you spotted several expensive, underused software licences. Did that discovery lead to a measurable drop in the department's annual software spend?

- Support Team: You focused your coaching on improving knowledge base access and CRM workflows. As a result, did the average time-to-resolution for customer tickets decrease by 15%?

These are the kinds of results that get an executive's attention because they directly affect the bottom line. You can explore a whole range of human resource KPIs to find other metrics that might align with your specific business goals.

Gathering Qualitative Feedback

But numbers alone don't tell the full story. A successful coaching programme should also improve how people feel about their work. It ought to be removing daily frustrations and giving them the tools and confidence to solve their own problems. This qualitative impact is just as important, but you have to ask for it.

In your one-on-ones or team retrospectives, be direct with your questions:

- "Since we started focusing on reducing tool friction, has your day-to-day work felt smoother?"

- "What's the single biggest process improvement we've made together in the last month?"

- "Do you feel like you have more time for deep, focused work now?"

This feedback loop is non-negotiable. A coaching programme shouldn't be a static, set-it-and-forget-it system. It's a living process.

Based on both the hard data and the human feedback you receive, you have to be ready to adjust your approach. The data might show an experiment didn't pan out, or your team might tell you a new process created an unexpected bottleneck somewhere else.

That’s not failure; that’s learning. Use that information to continuously refine your goals, the metrics you track, and the coaching conversations themselves.

Answering the Tough Questions on Data-Driven Coaching

Bringing data into coaching conversations is a big shift. It's natural for your team to have questions. Moving from subjective feedback to something more objective can feel strange at first, and it’s smart to be ready for concerns about privacy, buy-in, and whether it will even work.

Isn't This Just a Fancy Name for Employee Monitoring?

Not at all. The real difference comes down to intent and method. Employee monitoring is often about surveillance—capturing screen content or logging every single keystroke. That's not what this is.

True coaching for performance, when done with a privacy-first tool, is about aggregating anonymous metadata. It looks at which applications are used and for how long, but it never sees what you’re typing or what’s on your screen. The goal is to spot systemic friction in your team’s workflow, not to micromanage individuals. The conversation becomes about the ‘how’ of the work, not the ‘who’.

How Do I Get My Team on Board?

Trust is your currency here, and you build it through complete transparency. Be upfront from day one about what data is being collected, why you’re collecting it, and exactly how it will be used to make their work lives better.

Don't just tell them; show them. Walk them through the dashboard and point out that the focus is on team-level patterns and process optimisation, not individual report cards.

Frame it as a tool to help them get rid of the roadblocks they face every day. The data provides objective evidence to back up the changes they've probably been asking for, like ditching a clunky legacy app or carving out more uninterrupted focus time. Once that first coaching session leads to a positive change the team actually wanted, trust will start to build on its own.

What Results Can I Realistically Expect?

You’ll likely see some quick wins right out of the gate. A common one is spotting unused software licences and cancelling them, which translates into immediate cost savings. Simple, but effective.
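The licence quick win is easy to quantify. The sketch below assumes a simple inventory of seats paid for versus seats seen in use; the field names, the applications, and the costs are hypothetical and only there to show the calculation.

```python
# Hypothetical licence inventory: seats paid for, seats seen in use over the
# review period, and annual cost per seat. All figures are illustrative.
licences = [
    {"app": "DiagramTool", "seats": 20, "active_seats": 4, "cost_per_seat": 120.0},
    {"app": "IDE Pro", "seats": 15, "active_seats": 15, "cost_per_seat": 200.0},
    {"app": "ReportSuite", "seats": 10, "active_seats": 0, "cost_per_seat": 300.0},
]


def unused_seat_cost(inventory):
    """Map each application with idle seats to the annual cost of those seats."""
    return {
        item["app"]: (item["seats"] - item["active_seats"]) * item["cost_per_seat"]
        for item in inventory
        if item["seats"] > item["active_seats"]
    }


savings = unused_seat_cost(licences)
total = sum(savings.values())

for app, cost in savings.items():
    print(f"{app}: {cost:.2f} per year in idle seats")
print(f"Total potential saving: {total:.2f} per year")
```

Before cancelling anything, check with the team whether a seat is idle because the tool is unwanted or because it is only needed a few times a year.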

After a few coaching cycles, you should start seeing the needle move on your team-specific metrics. This could look like less time lost to context switching between apps or seeing repetitive tasks get done much faster.

The long-term benefits are where things get really interesting. Higher engagement is a big one because your team feels heard and empowered to fix the frustrating process issues that used to slow them down. In the FMO example above, scores for learning opportunities and receptiveness to employee ideas rose by 12.3% and 13.8%. Improvements like that can feed directly into better retention and productivity, which is where the sustained value of the programme shows up.

Ready to stop guessing and start building a team that truly performs? See how WhatPulse gives you the privacy-first data you need to coach with confidence. Discover WhatPulse Professional today.
